New architectures for online collections and digitization

Shyam Oberoi, Royal Ontario Museum (ROM), Canada, Kristen Arnold, Dallas Museum of Art, USA

Abstract

In 2013, the Dallas Museum of Art (DMA) received a generous grant to reinvent its approach to online collections. While many museums provide online access to their collections and many digitization projects are currently underway, the DMA sought to unify the content-production processes of object digitization with the publishing and aggregation of collections content to the Web. In this paper, the authors will propose a new digital framework for online collections that integrates digitization workflows, internal content-harvesting strategies from multiple internal repositories, and the creation of in-house crowdsourcing tools for rapid image and metadata management. By integrating process analytics with the framework from the beginning, the tools make it possible to monitor workflows and allow staff to explore the collection as a whole. In the future, this approach will allow the public to explore the collection as a whole and not simply as a series of pages. By leveraging a schemaless underlying data architecture, the framework facilitates the integration of metadata and media assets, as well as the modeling of aggregated external content. This approach allows for a flexibility in data modeling that promises to scale not only to large numbers of items, but also to other forms of data and object models that are currently unknown. The authors will document a rapid digitization approach that uses the digital collection framework as a key tool in object selection, verification, and enhancement. The process includes a collaborative selection and review with curatorial, collections management, and digital imaging staff.

Keywords: online collections, digitization workflows, crowdsourcing, data architecture, digital asset management

1. Introduction

By 2015, the question for museums should no longer be, “Should we put our collections online?” but rather, “Why haven’t we put our collection online?” Without minimizing the real technical challenges of such an endeavor or trivializing lingering residual bias that may exist in certain corners of certain institutions, the expectations in terms of audience are clear: if information is not available online, then it simply does not exist. And while there is still a real digital divide within our audiences, a recent Pew study illustrated the overall trend: among eighteen to twenty-nine year olds, 77 percent of those with a household income under $30,000 still had a smartphone; among that same demographic group, those with a household income of $75,000 or more reported usage at 90 percent (Pew Research Center, 2013).

As our audiences increasingly live online, museums have responded in kind by leveraging the social-media platforms that our visitors engage with. But at their core, these platforms are still conduits for certain types of content: Twitter for telegraph bursts, Pinterest for images. LaPlaca Cohen’s 2014 Culture Track report neatly summarizes: “What drives participation? Content, value and being social.” In other words, what these platforms require in order to be relevant, interesting, and engaging is original content, and the greatest store of information that any museum possesses is about its own collection. Since the nineteenth century, museums have been photographing their collections; they have been documenting and researching and arguing about them for as long as museums have existed. An IMLS National Study from 2008 addressed with some definitiveness questions that we still hear being asked: that “libraries and museums evoke consistent, extraordinary public trust among diverse adult users,” and as such information on their websites is more likely than most to be trusted by the public; and “the number of remote online visits is positively correlated with the number of in-person visits to museums” (Griffiths & King, 2008). That is, Web users view this information as complementing, rather than discouraging, an in-person visit. With the multitudinous rapidity of technological change, it may be easy to forget that it is only in the last quarter century that we could even dream of making this wealth of information available to anyone in the world, immediately, for free. Even today, for many museums, putting their collections online unfortunately remains a dream.

2. Requirements for an online collection

Prior to relaunch in August 2014, the Dallas Museum of Art did have, via a not-well-integrated microsite, around one-third of its collection online. This microsite suffered from the typical museum website complaints: inefficient search, awkward presentation of images and metadata, unintuitive user interface. Compared to our overall site traffic, the number of visits to this microsite was essentially a rounding error.

Figure 1: the DMA’s online collection, prior to August 2014

Setting aside for a moment the question of appropriate platform or technology, we had separately determined that as an institution it was important for us to publish our entire collection online. The reasoning went beyond transparency and openness: we felt that we needed to more positively and strongly broadcast the fact that the Dallas Museum of Art has a deep and encyclopedic collection. So as we were determining how we wanted to improve upon this existing framework, we spent time collaborating and documenting what we considered to be the core requirements for a modern online collection:

- To make available online all of the museum’s accessioned objects, regardless of the current state of cataloging or image availability/quality

- To publish and cross-link metadata for these objects, even if this information resides in repositories outside of the museum’s purview

- To distinguish between works under copyright and works in the public domain, and present images of the highest quality in accordance with existing copyright law

- To provide, where available, high-resolution images for zoom and download

- To support a responsive design

- To integrate with existing social-media platforms

- To improve search accuracy but also provide serendipitous results

- To facilitate SEO via persistent “friendly” URLs

With these requirements in mind, we turned our attention to making them happen.

3. Buying software, building software

It is probably not too much of an exaggeration to say that there are as many ways of publishing a collection online as there are online collections. While a few turnkey solutions do exist, they are generally limited in scope and functionality, and vendor specific. For organizations with disparate data sets, legacy systems, or both, there is generally no one simple solution; as a consequence, each builds (or has built for them) something specifically tailored to that institution’s needs and habits.

The Dallas Museum of Art was in a not-dissimilar situation. We had a collections management system (TMS) and images on a server; we had certain guidelines for cataloging object information that had been more or less adhered to. We had a revised list of requirements that we believed were essential to the success of our online collection. Surveying the landscape, we quickly decided that we would need to come up with a different approach, not just because of our near-term requirements, but also to sustain us in the long term as internal cataloging standards, institutional workflows, and broader community initiatives (linked open data, metadata taxonomies) matured and evolved.

As mentioned above, one of the primary goals of this project is the complete digitization of the museum’s accessioned collection. Of the DMA’s 23,000 accessioned objects, only 10,627 had images of any kind, some of which were scans of analog slides or transparencies, or low-quality documentary images. Original images were stored in a local network-attached storage (NAS) but were generally unavailable to staff, while low-quality derivatives were linked up in TMS.

TMS had long been in place as the system of record for the DMA’s collection information, but in order to provide a more robust and accessible place for these images, the museum needed a digital asset management system (DAM). For that, we turned to Piction and began the process of ingesting original collection photography into the DAM, and linking those images to the appropriate object in TMS. This linkage occurs automatically on file ingest; the Piction loader parses the name of the image file and then attempts to match that name to an accession number in TMS; if successful, the internal TMS ObjectID is recorded as a metadata value on that asset in Piction. All photography is shot and archived as high-resolution TIFs, along with predefined JPG derivatives that are also automatically created on asset ingest. Just as DMA staff use TMS as the system of record for changes to core collection information, Piction is now being integrated into staff workflows as the system of record for the museum’s digital assets.
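
The file-name matching described above can be sketched roughly as follows. This is a minimal Python illustration and not Piction's loader: the naming convention (underscores standing in for dots, plus an optional `-v01` view suffix), the `TMS_OBJECTS` lookup table, its values, and both function names are assumptions made for the example.

```python
import re
from pathlib import PurePath

# Hypothetical stand-in for TMS: accession number -> internal ObjectID.
TMS_OBJECTS = {"1969.S.20": 1001, "1985.R.17": 1002}


def accession_from_filename(filename):
    """Derive an accession number from an image file name.

    Assumed convention (not from the paper): dots in the accession number
    are written as underscores, optionally followed by a view suffix
    such as '-v01'.
    """
    stem = PurePath(filename).stem        # drop directory and extension
    stem = re.sub(r"-v\d+$", "", stem)    # drop the optional view suffix
    return stem.replace("_", ".")


def match_object_id(filename, tms_objects=TMS_OBJECTS):
    """Return the matching ObjectID, or None when no object matches."""
    return tms_objects.get(accession_from_filename(filename))
```

Files that return `None` here would be the ones left unlinked and needing manual attention, which is one reason a consistent file-naming convention matters for automatic ingest.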

Figure 2: various views of a DMA object in Piction (digital asset management)

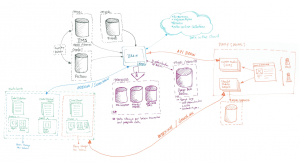

With these two internal content silos in place, we set about scoping and building a framework to support content aggregation and dissemination. We call this the Brain:

Figure 3: content flow within Brain (DMA)

At the heart sits Django, a Python Web framework, with MongoDB, a document-oriented database (also known as a schemaless or NoSQL database). As Martin Fowler says in “Schemaless Data Structures” (2013): “Being schemaless reduces ceremony (you don’t have to define schemas) and increases flexibility (you can store all sorts of data without prior definition).” In other words, being schemaless allows us to rapidly make adjustments and additions to the specific types of content harvested. In terms of infrastructure, Brain resides on a virtual machine inside the DMA’s firewall, but harvested images are stored in Amazon’s S3 cloud. Though Brain currently supports a number of different content aggregations (for example, data from the DMA’s Friends program), for this discussion we will limit ourselves to the object information from TMS and the images associated with those objects in Piction. From TMS, we retrieve a predefined set of metadata fields (for object tombstone, constituent, geography, etc.); from Piction, all available JPG derivatives of that object along with technical EXIF/IPTC/XMP image metadata. Each object may have multiple images, and multiple derivatives are created and cached by Piction and harvested by Brain for performance optimization (in general, a thumbnail is provided for search result display, a medium-size JPG for the main object page, and a high-res JPG at original dimensions for image download). The harvest itself is driven by a series of mapping classes (i.e., descriptors that define where the data will be imported from and how this new data should be treated).
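
To make the idea of a mapping class concrete, here is a minimal sketch in plain Python. It is not Brain's code: `FieldMapping`, `apply_mappings`, and the TMS field names used below are hypothetical, chosen only to show a descriptor that records where a value comes from and how it should be treated. Because the target is a schemaless document, a source field that is missing or empty is simply left out.

```python
from dataclasses import dataclass
from typing import Any, Callable


def identity(value):
    """Default treatment: keep the source value unchanged."""
    return value


@dataclass(frozen=True)
class FieldMapping:
    source: str                                  # field name in the source record
    target: str                                  # key in the harvested document
    transform: Callable[[Any], Any] = identity   # how to treat the value


def apply_mappings(record, mappings):
    """Build a harvested document from one source record."""
    document = {}
    for mapping in mappings:
        value = record.get(mapping.source)
        if value in (None, ""):
            continue  # schemaless target: absent fields are omitted, not nulled
        document[mapping.target] = mapping.transform(value)
    return document


# Hypothetical mapping for a few tombstone fields.
TMS_MAPPINGS = [
    FieldMapping("Title", "title", str.strip),
    FieldMapping("ObjectNumber", "accession_number"),
    FieldMapping("Dated", "date_text"),
]
```

Adding a new harvested field in this style means appending one more `FieldMapping`, with no schema migration, which is the flexibility the schemaless approach is meant to buy.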

Since the digitization project is rapidly creating new images and reviewing metadata for existing records, it was critical for us to be able to ensure rapid aggregation and dissemination. Our current workflow has images from the previous day ingested into Piction in the morning, metadata changes made to TMS during the workday, and the harvest running every night. While the harvest is running, it will crawl each record in the source repository and honor all the declared relationships at that source; only if the data integrity check for the record passes will it be sent across for transformation. ElasticSearch, an open-source search engine based on Lucene, is used to index and facet the harvested data. A log is produced for monitoring progress and recording errors in data integrity, and event listeners are used throughout to track status; these are also useful for extending functionality (for example, a signal listener is set to automatically update the index of a record in ElasticSearch as soon as that record has been harvested in order to maintain near real-time results).
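
The harvest loop described above (integrity check, logging, and a listener that refreshes the search index) might look roughly like this. It is a sketch under stated assumptions: the small `Signal` class is a stand-in written for this example rather than Django's signal implementation, `search_index` is a dictionary standing in for ElasticSearch, and the required fields are invented.

```python
import logging

logger = logging.getLogger("harvest")


class Signal:
    """Tiny stand-in for an event dispatcher (not Django's Signal class)."""

    def __init__(self):
        self._receivers = []

    def connect(self, receiver):
        self._receivers.append(receiver)

    def send(self, **kwargs):
        for receiver in self._receivers:
            receiver(**kwargs)


record_harvested = Signal()
search_index = {}  # stand-in for the search engine's index


def update_index(record, **_ignored):
    """Listener: re-index a record as soon as it has been harvested."""
    search_index[record["id"]] = record


record_harvested.connect(update_index)

REQUIRED_FIELDS = ("id", "title")  # assumed integrity rule


def passes_integrity_check(record):
    return all(record.get(name) not in (None, "") for name in REQUIRED_FIELDS)


def harvest(source_records):
    """Harvest valid records; log and skip the rest. Returns (ok, rejected)."""
    harvested = rejected = 0
    for record in source_records:
        if not passes_integrity_check(record):
            logger.warning("integrity check failed: %r", record)
            rejected += 1
            continue
        record_harvested.send(record=record)
        harvested += 1
    return harvested, rejected
```

Keeping the index update in a listener rather than in the loop itself means further behavior can be attached later without touching the harvest code.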

Using the Django REST Framework (DRF), Brain supports both JSON and XML responses (both read-only), as well as model serialization; these API calls are in turn used to return the harvested metadata and images to the DMA’s online collection. The following is an example of an object record represented in Brain:

Figures 4 and 5: GET API for an object (4) and a different view of that same object (5) via Brain (DMA)

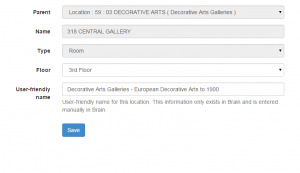
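
For readers without access to the figures, the two read-only response formats can be illustrated with the Python standard library alone. This is not the Django REST Framework's renderer code; the function names and the flat record shape are assumptions, and the XML helper only handles flat records whose keys are valid XML element names.

```python
import json
import xml.etree.ElementTree as ET


def to_json(record):
    """Serialize a harvested record as JSON with stable key order."""
    return json.dumps(record, sort_keys=True)


def to_xml(record, root_tag="object"):
    """Serialize a flat record as XML, one child element per field."""
    root = ET.Element(root_tag)
    for key in sorted(record):
        child = ET.SubElement(root, key)
        child.text = str(record[key])
    return ET.tostring(root, encoding="unicode")
```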

For us, Brain is not simply the mirror for the original content in TMS and Piction, but also a source for more subjective improvements for presentation online. Two examples: while the Dallas Museum of Art has extremely specific object-location information in TMS, this information has been cataloged in a way that registrars understand (“03 DECORATIVE ARTS;318 CENTRAL GALLERY” is an example of a single TMS site and room). Aside from being obscure, this information also does not match any existing signage or maps that the museum provides to visitors. In order to translate these specific (and essential) registrar locations into something that the online visitor would understand, we needed to set up a crosswalk:

Figure 6: crosswalk of TMS object location with visitor-friendly museum location, via Brain (DMA)
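
In its simplest form, such a crosswalk is a lookup table keyed on the TMS site and room. The sketch below assumes the two parts are separated by a semicolon, as in the example above; the visitor-facing label and the function name are hypothetical.

```python
# Registrar (site, room) -> visitor-facing label. The label is invented.
LOCATION_CROSSWALK = {
    ("03 DECORATIVE ARTS", "318 CENTRAL GALLERY"): "Decorative Arts Galleries, Level 3",
}


def visitor_location(tms_location, crosswalk=LOCATION_CROSSWALK, default=None):
    """Translate a 'SITE;ROOM' registrar location into a visitor label."""
    site, _, room = tms_location.partition(";")
    return crosswalk.get((site.strip(), room.strip()), default)
```

Returning a default for unmapped locations lets the website omit the location rather than display a registrar code to the public.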

Of much more importance, though, are the image review and presentation tools that were created in Brain in order to both flag images for additional post-production by DMA staff and arrange images for optimal online presentation. In the screenshot in Figure 7, we see all available images harvested from Piction for a TMS object record. From here, a series of flags can be set on each image to indicate whether it needs to be cropped, rotated, flagged for some other reason, or suppressed from the public website altogether (these flagged images can then later be reviewed and corrected by imaging staff). Images here can also be dragged and dropped into any order, allowing imaging, curatorial, and collections management staff to choose the images and set the order of presentation for each object.

Figure 7: image review, selection, and ordering for an object in Brain (DMA)

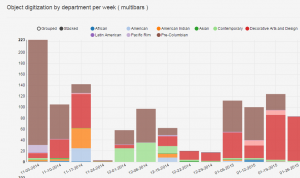
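
The review state behind such a screen can be modeled quite simply. The sketch below is an assumption about the data, not Brain's model: each image carries a set of flags and a position set by drag-and-drop, and the flag names are invented for the example.

```python
from dataclasses import dataclass, field

POST_PRODUCTION_FLAGS = {"crop", "rotate", "other"}  # assumed flag names


@dataclass
class ImageReview:
    filename: str
    position: int                       # order chosen by staff
    flags: set = field(default_factory=set)


def public_images(images):
    """File names to show online: unsuppressed, in staff-chosen order."""
    visible = [image for image in images if "suppress" not in image.flags]
    return [image.filename for image in sorted(visible, key=lambda i: i.position)]


def needs_post_production(images):
    """File names that imaging staff should revisit."""
    return [image.filename for image in images if image.flags & POST_PRODUCTION_FLAGS]
```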

Finally, a dashboard in Brain contains custom-built analytics where DMA staff can check daily and weekly progress of content production. Charts are available to show objects digitized by department, classification, and location within the museum. A goal thermometer at the top lets us know how much has been done to date, and how many objects remain to be digitized. From May 2014 (when we started digitization) to January 2015, we photographed over 3,500 objects with over 16,000 images, or an average of about 100 objects per week.

Figures 8 and 9: charts in Brain report the number of objects digitized by week (8) and objects with and without images by classification (9)
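
The arithmetic behind a goal thermometer is straightforward, and a small sketch shows what such a dashboard has to compute. The function name and return shape are assumptions. Feeding it the round figures quoted in this paper (23,000 accessioned objects, 3,500 photographed, about 100 per week) is only illustrative, since it treats newly photographed objects as the only completed ones.

```python
import math


def digitization_progress(total_objects, digitized, objects_per_week):
    """Summarize progress toward a digitization goal."""
    if total_objects <= 0 or objects_per_week <= 0:
        raise ValueError("total_objects and objects_per_week must be positive")
    remaining = max(total_objects - digitized, 0)
    return {
        "percent_complete": round(100 * digitized / total_objects, 1),
        "remaining": remaining,
        # Whole weeks needed at the current pace.
        "weeks_remaining": math.ceil(remaining / objects_per_week),
    }
```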

4. Digitization production workflows

With our content silos (TMS for collections metadata, Piction for images of these objects) aggregated into Brain, we now had a tool in place that could be leveraged for a number of different essential functions: providing staff with a unified resource to consult the level of completion for image and object information; analytics to track status of image production and ingest; and a simple toolkit to make adjustments to the presentation of this information online. With this, we were now ready to establish a rigorous production workflow with the multiple stakeholders across the museum who would be involved in the digitization project.

Each week, our registrars consult Brain in order to determine undigitized objects; using key elements of object metadata—physical object location, curatorial department, object type and material (for example, small gold figurines, or works on paper)—the registrars will assemble object packages in TMS. For these distinct object sets, care is taken to combine similar objects together (that is, objects in physical proximity to each other, of similar size, of similar materials) in order to reduce photo studio setup time. These object packages will then be shared with curators for review and modification, as well as with imaging staff in order to confirm the necessity for new photography. Once confirmed, preparators will stage the objects so that they can be further examined by curatorial and conservation staff; while out, these objects will also be measured to confirm dimensions; cleaned, if necessary, prior to photography; and examined for any additional inscriptions or markings. From there, the preparators will move the objects onto the photographers’ sets for digitization. At a minimum, all two-dimensional objects are shot front and back, and if possible framed and unframed; all three-dimensional works are shot from at least four to six angles (front, back, left, right, top, and bottom). Depending on the object, additional details and angles will be generated. At the end of each day, the photographers will transfer their shots to a network share, where they are ingested overnight into Piction and then harvested into Brain. Throughout this workflow, not only are we generating professional digital imagery for each object in our collection, but we are also confirming, and in many cases augmenting, basic tombstone information about the objects as they are being shot.

Figure 10: digitization workflow for the DMA
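
The weekly package-building step can be approximated in code as a grouping problem: collect objects that still lack images, group those that share a location and material, and split large groups into manageable batches. The record keys, the `max_size` limit, and the function name are assumptions for this sketch; in practice the registrars' judgment also enters, which code does not capture.

```python
from collections import defaultdict


def build_packages(objects, max_size=25):
    """Group undigitized objects by (location, material) into packages."""
    groups = defaultdict(list)
    for obj in objects:
        if obj["has_image"]:
            continue  # already digitized
        groups[(obj["location"], obj["material"])].append(obj["accession_number"])

    packages = []
    for location, material in sorted(groups):
        numbers = sorted(groups[(location, material)])
        # Split each group so that no package exceeds max_size objects.
        for start in range(0, len(numbers), max_size):
            packages.append({
                "location": location,
                "material": material,
                "objects": numbers[start:start + max_size],
            })
    return packages
```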

5. Publication online

The current version of the DMA’s online collection looks nothing like our previous microsite, and yet it’s worth noting that we intentionally constrained ourselves during redesign to conform to the overall look and feel of the rest of the existing website. Even though we were designing the online collection from scratch, we did not want it to appear as another microsite, but rather a seamless part of the museum’s Web presence. This led us to certain real limitations (in terms of color, layout size, etc.) but also challenged us to think creatively around optimal presentation of information within these constraints. Like the rest of dma.org, the online collection is built on Drupal; unlike the rest of the Web content in the CMS, the information here is retrieved directly from Brain via the API calls (though each object is cached as a node in the CMS for performance optimization; cache invalidation is performed only where object metadata or images have changed in Brain).
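
One common way to invalidate a cache only when content has changed is to store a fingerprint of each record alongside its rendered node. The sketch below illustrates that general technique in Python for consistency with the other examples; it is not the Drupal implementation, and `NodeCache`, `fingerprint`, and the record shape are assumptions.

```python
import hashlib
import json


def fingerprint(record):
    """Stable hash of a record's content, independent of key order."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class NodeCache:
    def __init__(self):
        self._nodes = {}  # object id -> (fingerprint, rendered node)

    def get_or_render(self, record, render):
        """Return (node, was_rendered); re-render only on changed content."""
        current = fingerprint(record)
        cached = self._nodes.get(record["id"])
        if cached is not None and cached[0] == current:
            return cached[1], False
        node = render(record)
        self._nodes[record["id"]] = (current, node)
        return node, True
```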

Figure 11: the DMA’s online collection, landing page (August 2014)

The new landing page has three entry points for the Web visitor: search, browse by facet, or a random assortment of highlights from the DMA’s collection. From a technical perspective, just about everything in the online collection is the result of a search: the browse categories return search results for the most common artists or materials; the number of objects per department or location; the colors via the palette extracted by Brain; or the results between two numeric dates. Since the search box is the most common entry point, we enabled type-ahead auto-complete on certain indexed fields (artist, title) to assist with spelling errors, but we also allow users to search on any piece of object metadata in the collection.
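
Type-ahead completion is, at its core, a prefix lookup over indexed terms. The sketch below shows the idea with a sorted list and binary search from the standard library; it is not how ElasticSearch implements suggestions, and the class name and sample terms are made up.

```python
import bisect


class TypeAhead:
    """Case-insensitive prefix suggestions over a fixed list of terms."""

    def __init__(self, terms):
        self._display = {}
        for term in terms:
            self._display.setdefault(term.casefold(), term)
        self._keys = sorted(self._display)

    def suggest(self, prefix, limit=5):
        needle = prefix.casefold()
        # Binary search for the first key that could start with the prefix.
        start = bisect.bisect_left(self._keys, needle)
        suggestions = []
        for key in self._keys[start:]:
            if len(suggestions) >= limit or not key.startswith(needle):
                break
            suggestions.append(self._display[key])
        return suggestions
```

Offering completions from terms that actually occur in the index helps with spelling because the visitor selects a correctly spelled name after typing only its first few letters.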

Figure 12: search results from the DMA’s online collection

Using ElasticSearch, we can facet and further refine search results, again using the same predefined categories: objects on view; with images; by artist, location, etc. Multiple facets can be selected at once (for example, on-view objects in a certain location of a certain classification). This faceting of results by predefined categories is familiar to users of Amazon and similar e-commerce sites, and provides users with a simple way to further refine their results without additional typing. It’s perhaps also worth noting that we deliberately avoided anything that resembled an “advanced” search, where a user would be forced to know which fields contained which information.
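
Faceting comes down to two operations: counting how many results fall under each value of a category, and narrowing the results to those matching every selected value. The pure-Python sketch below illustrates both on in-memory records; it stands in for what the search engine does at scale, and the field names are assumptions.

```python
from collections import Counter


def filter_by_facets(objects, selected):
    """Keep objects matching every selected facet value (logical AND)."""
    return [
        obj for obj in objects
        if all(obj.get(name) == value for name, value in selected.items())
    ]


def facet_counts(objects, fields):
    """Count how many objects carry each value, per facet field."""
    return {
        name: Counter(obj[name] for obj in objects if name in obj)
        for name in fields
    }
```

Recomputing the counts on the filtered results is what lets the interface show how many objects each further refinement would return.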

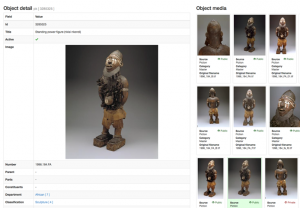

Figure 13: an object page in the DMA’s online collection

A challenge with collection object pages is that they can be a dead-end for user inquiry: a user searches for a work and then (hopefully) finds that work and then bounces away from the site. In order to allow our visitor to continue his/her exploration, we wanted to provide as many alternate pathways as possible. To that end (and using the same metadata faceting described above) multiple cross-links, in red, are available for specific metadata fields (in the example above, clicking on “African” will execute a search for all objects in the African department). Similarly, at the bottom of each object page, we display (via ElasticSearch) additional recommended results based on the characteristics of the current object: similar objects (i.e., objects by the same artist/culture/title); objects from the same department; objects in the same location in the museum; and even objects with the same color palette.
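
The related-object pathways can be thought of as one extra query per shared characteristic. The following sketch shows that idea on in-memory records rather than through ElasticSearch; the function name, the field list, and the `limit` are assumptions.

```python
def related_objects(current, collection,
                    fields=("artist", "department", "location"), limit=4):
    """Map each shared characteristic to ids of other objects that share it."""
    related = {}
    for name in fields:
        value = current.get(name)
        if value is None:
            continue  # nothing to match on for this field
        matches = [
            obj["id"] for obj in collection
            if obj["id"] != current["id"] and obj.get(name) == value
        ]
        if matches:
            related[name] = matches[:limit]
    return related
```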

Since digitization is a key part of this project, multiple images for an object are supported via a simple image slider. Objects with rights restrictions are constrained to thumbnail size per AAMD Policy on the Use of “Thumbnail” Digital Images in Museum Online Initiatives (2011), but the rest are available for download as high-res JPGs at original size. All high-res images are also zoomable at full screen, using OpenSeadragon. For “backwards compatibility,” the entire object page can be printed or downloaded as a PDF; since just about everyone has an interest in easily sharing online content, ShareThis is available.

Like the rest of the DMA’s website, the online collections’ design is responsive and supports a wide range of devices and form factors (on average, more than 40 percent of the DMA’s overall Web traffic comes from mobile devices). Each object in the collection is given an SEO-friendly URL, comprising /artist/title or /department/title. Instead of /collection/object/3285325/ from Brain, we publish /collection/artwork/african/standing-power-figure. The URL for every object, along with the rest of the content on the DMA’s website, is available in an XML sitemap for search engine discovery. Per Google Analytics, traffic to the new online collection now accounts for more than 17 percent of total page views for our entire website.
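
A friendly URL of this shape can be generated by slugifying the two path segments. The sketch below is an assumption about how that might be done, not the site's code: `slugify` and `friendly_url` are hypothetical names, and the example does not handle two objects that produce the same slug, which a real site would need to disambiguate.

```python
import re
import unicodedata


def slugify(text):
    """Lowercase ASCII slug: accents stripped, other characters -> hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def friendly_url(title, artist=None, department=None):
    """Build /collection/artwork/<artist-or-department>/<title>."""
    first_segment = artist or department
    if not first_segment:
        raise ValueError("either artist or department is required")
    return f"/collection/artwork/{slugify(first_segment)}/{slugify(title)}"
```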

6. Next steps

All the tools built by the DMA to harvest, aggregate, and publish its collection information and images utilize technologies that are free and open source, but this “free” should be understood as the modifier in “free puppy” rather than “free beer.” There are still real costs associated with the care and feeding of this framework: technical resources, cloud-hosting costs, storage, and maintenance. We believe that these costs are worth incurring since we have larger ambitions for what an online collection can be.

The DMA’s new online collection launched in August 2014, but for us it is still just a first iteration. At this moment, and through the same grant that is supporting object digitization and application development, a team of researchers (aka “digital collection content coordinators”) is reviewing the most important and public works in the DMA’s collection. We don’t yet know the specifics around how the results of their research will appear, but we know absolutely that it will be another set of content that will be aggregated in Brain, and that it will enhance and complement the existing online collection. In addition, there are still audio and video materials to be integrated, exhibition histories, and linkages to existing taxonomies for subject terms, artists, and geography. We have come a long way in a short time from where we were, but we still have far to go.

References

Association of Art Museum Directors. (2011). AAMD Policy on the Use of “Thumbnail” Digital Images in Museum Online Initiatives.

Fowler, Martin. (2013). “Schemaless Data Structures.”

Griffiths, Dr. José-Marie, & Donald King. (2008). Interconnections: The IMLS National Study on the Use of Libraries, Museums and the Internet.

LaPlaca Cohen. (2014). Culture Track Top Line report.

Pew Research Center. (2013). Internet and American Life Project. April 17–May 19. Tracking survey.

Cite as:

. "New architectures for online collections and digitization." MW2015: Museums and the Web 2015. Published January 31, 2015. Consulted .

https://mw2015.museumsandtheweb.com/paper/new-architectures-for-online-collections-and-digitization/